Go

Match - AlphaGo versus Lee Sedol

Computer Game Research and the U of A

We

went to a lecture the other day (March 14, 2016, which also happened

to be Pi Day, which is a nice math related coincidence) about the

match between human Go expert Lee Sedol and the computer program

AlphaGo. It was presented by Prof Martin Mueller of the University

of Alberta. The U of A has long been involved in AI (Artificial

intelligence) research, via games. In fact, about half of the team

who programmed AlphaGo had U of A backgrounds (alumni, researchers,

post-docs, etc.), so it was a very appropriate venue for such a talk.

That includes the AlphaGo team lead, who got his PhD at the U of A.

The

U of A has also been involved in computer chess (since the 1970’s),

checkers (Professor Schaffer ‘solved’ checkers recently), and

poker. The Go research goes back 30 years or more. Why has the U of

A and other universities used games to do research into AI?

- They’re fun

- They’re well defined

- It’s reasonably easy to measure your progress.

The Game

First

off, I haven’t played the game of Go myself, so this description is

necessarily sketchy and possibly wrong in some details. But, anyway:

- Go is played on various sized boards, but at its highest level the board is 19 by 19 squares (chess is 8 by 8). There are 10 stones aside, black and white.

- This results in a possible game space (permutation space, possible moves, etc.) that is much larger than the number of particles in the observable universe.

- The rules are simple – surround the opposition and claim territory, but the strategies are subtle and complex. A numeric score is calculated, based on territory and captures, and that determines the winner.

- Until recently, computers were rather weak, intermediate level at best. But AlphaGo was a big leap, and now plays at the highest levels, as the match showed.

- There are many hundreds of human professionals, who play 40+ hours per week and make their living that way, including Lee Sedol, who has played from the age of 12 until his current age, mid-30s. So, he has played thousands of games, though AlphaGo has played millions (more on that later).

The Match

Note

that these comments are based on the professor’s descriptions of

the games, along with a bit of commentary from the Wiki entry.

Professor Mueller is a high level Go player himself, so his analysis

should be good.

- Game 1

- Lee “tested” the program with some aggressive moves, but the program responded well.

- The program countered with some unusual and unexpected moves.

- Each side made some critical errors, but the program won.

- Game 2

- Lee played it safe.

- The computer got creative, played an unexpected game.

- Generally considered a masterful game by AlphaGo, who won.

- Game 3

- Lee changed his strategy, with strong early moves.

- Computer responded perfectly to this strategy.

- Almost a perfect, flawless game by AlphGo, who won.

- Many described the program’s play as “almost scary”.

- Game 4

- Lee tried a new strategy.

- He played a “great move” (unconventional), that confused the program.

- It presented many interconnected threats, that seemed to overwhelm the computer. It didn’t seem to have the computing power to respond appropriately.

- The program seemed to flail for about 10 moves, and dug itself into a hole.

- Lee played it safe after that and won.

- The human race rejoiced.

- Game 5

- This actually came the night after the lecture, but the news media reported that the game was extremely close, about as close as a Go game can be.

- AlphaGo made an early “bad mistake” but clawed back for the win, in “deep overtime”.

Lee

noted that he felt like the games were close, and the match could

have gone either way. The fact that the computer didn’t tire, or

experience emotional highs and lows, seemed to be an advantage, in

his opinion. He also felt that he played better when having the

opening move.

A Brief Look at the History of Man-Machine Matches

Professor

Mueller gave a brief overview of the history of matches between human

and computer program. These matches have always been an important

part of Computing Science research, at the U of A and at many other

universites.

- Chess – has always been a big part of the research program. Humans prevailed until about 1997, when Kasparov was defeated by IBM’s Deep Blue. Since then, there have been many other matches, with the best programs tending to win, though not necessarily dominate the best human champions.

- Backgammon – this game has an element of luck, but it also has a significant strategy element, that computer programs using neural nets have optimized, in order to play at or above the best human players. Programs are “nearly perfect” now, according to Professor Mueller.

- Checkers – the U of A has a long involvement with checkers, via the program Chinook. This program beat or equalled the best humans, in the mid-1990s. Since then, the U of A’s Jonathan Schaeffer has used Chinook to “solve” checkers (in 2007), proving that the best a human player can do against Chinook is to play to a draw.

- Othello – a computer program mastered this game inn 1997. It is now widely acknowledged that computer programs can play significantly better than humans.

- Poker – this is now a focus of U of A research. In 2007, the U of A Polaris program became the first program to win an important match with humans. Texas hold-em is now considered solved (2015 article in Nature).

- Go – in 2009 a U of A program won against a top human on a 9 by 9 board. But, until AlphaGo, a computer needed a substantial handicap when playing against human beings. As we now know, that’s no longer the case.

The Science

Before

looking at how AlphaGo makes decisions, it is worth reminding

ourselves of how humans do so:

- They consider alternatives.

- They consider consequences of various alternatives.

- They evaluate the options accordingly.

- They choose the best option, under the circumstances.

AlphaGo

does something like this, using Monte Carlo search strategies, deep

neural networks and reinforcement learning, via simulations. My

description of this process will be a combination of what was said in

the talk, what I read in the paper in Nature (Mastering the game of

Go with deep neural networks and tree search, Jan 28, 2016) and what

I made of it all. Not being an expert in most of the technologies

being used, that could result in some misunderstandings.

For

the record, most of my experience is in the more traditional

statistical methods, such as multiple regression analysis, logistic

regression, ANOVA and the like, with the odd foray into newer methods

such as decision trees. As noted above, the AlphaGo program utilizes

the so-called “machine-learning” methods, primarily.

AlphaGo’s

basic strategy can be broken down into:

- SEARCH

- This is a Monte Carlo tree search, something along the lines of a Decision Trees analysis.

- The branches of the tree search can proliferate enormously, giving incredibly huge trees.

- Thus, AlphaGo has to refine its search space, choosing the most promising paths early on (i.e. the shortest paths that score the most “points”).

- EVALUATE

- Sometimes evaluation can be easy for a computer program, as it is in real life for human beings. But more often, it is “hard”, if the problem is complex. This is very familiar to us from our everyday human experience, as well.

- So, as the search progresses, the current search path has to be evaluated. A sort of evaluation function is needed for this, one that can look at a Go board search path and estimate the probability of a win from that.

- SIMULATE

- AlphaGo’s evaluation function is built up mostly from simulations that it has done, with sub-programs playing millions of games against each other, utilizing slightly different strategies (unsupervised learning).

- It can then base its evaluation function on the results of all of those games. Since it has played millions of games to conclusions, it can estimate the likelihood of various board configurations, choosing paths that are likely to ultimately result in a win.

- Utilizing the experience of all these games, also helps it narrow down the search space to a much smaller subset, more realistic space than a comprehensive search would require.

- It has long been assumed that this is what humans do when playing games like Go - through extensive experience and a particular mental aptitude for the game in question, they can narrow down the search space to those that are most relevant to the game.

- It should be noted that the program has first been trained with the tens of thousands of games among the best humans, to begin the reinforcement learning. The simulations noted above come after that initial training (supervised learning).

- Note that the paper is still somewhat vague on the details of the evaluation function or matrix. It seems clear that the accuracy and predictive power of the “value network” has been enhanced enormously by the unsupervised learning that the millions of supervisions gave it.

It

should also be noted that the AlphaGo program benefitted from:

- Plenty of high speed hardware, consisting of thousands of cores (CPUs and GPUs).

- Millions of simulations.

- Much testing and fine-tuning by human programmers and AI experts.

- I should also note that only in a computing science lecture could a phrase like “reinforcement through self-play” be said without cracking an ironic smile.

One

issue with neural nets algorithms that is noted in the literature is the “black box” problem.

They take input signals, send them through a series of intervening

neural layers, and ultimately come up with output signals. The

neural nets can be altered via various algorithms (back propagation,

etc.), and the nets that produce the desired effect on the output

variable of interest can be reinforced and selected. This can

produce a very good predictive model, but it is still a black box.

You know what nets worked, but you don’t necessarily know why.

This is in contrast to a more traditional prediction method like

regression, whereby you know what variables had the greatest effect

in your predictive model, and can therefore understand something of

the real-world processes behind the model.

For

what it’s worth, my overall take on the match is that AlphaGo’s

main advantage was primarily that it had the benefit of playing

millions of games, and thereby creating a much richer evaluation

function that other programs, and most humans can manage. For a

human to build up a similar database, would take multiple hundreds of

lifetimes.

Nonetheless,

it is interesting that Lee Sedol managed to confuse and beat AlphaGo

in one game, and essentially play to a draw in another, even though

he had played perhaps ten thousand games, at most (a game a day for

twenty years would be about 6500 games). So, there must still be an

“X-factor” going on in the human neural net, that hasn’t yet

been discovered. Some of us like to think that will continue to be

the case, indefinitely :).

The Future

It

is hoped that the scientific advances in producing programs like

AlphaGo can be used for more general and pressing real-world

problems. Basically, any problem that can be modelled in similar way

as the Go problem could yield to these methods. That would include

any complex problem that involved an “easy” problem statement, a

solution that can be kick-started by expert human knowledge, and then

fine-tuned via millions of iterations of self-play simulations.

Since

AlphaGo is a product of a Google owned company, applications like

self-driving cars come to mind. Some others that were mentioned were

general image processing, speech recognition, medical imaging

diagnostics, and drug research. It should be borne in mind, though,

that many real-world problems don’t have these features, so the

“AlphaGo method” is not a general purpose one.

One

issue that Professor Mueller brought up, was the movement of AI

research from the university sector to the corporate sector. This

can be a concern, as the corporate sector has much more lavish

funding, at least for areas that have a high profit potential. Will

academic research be left in the dust? It’s hard to say - the

corporate world still needs the trained personnel that universities

provide, as well as the ability to research problems that have no

obvious profit potential. So, academic research will probably remain

vital, but it might mean that the collaboration between universities

and corporations will grow, with all of the attendant problems and

opportunities that would entail.

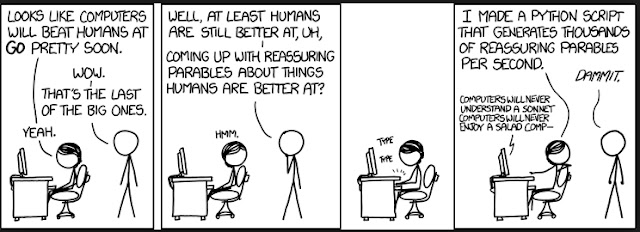

Here's XKCD, with a comment on computer-human matchups.

Obligatory Star Trek reference: Computers will never beat us at Fizbin.

No comments:

Post a Comment